In an era of on-demand video interviews, the challenge for hiring teams is no longer just evaluating what candidates say — it’s understanding how they say it. As AI tools and content generation become mainstream, many organisations are asking the same question: How can we be sure we’re seeing the real person behind the screen?

That’s exactly why we developed the Authenticity Indicator.

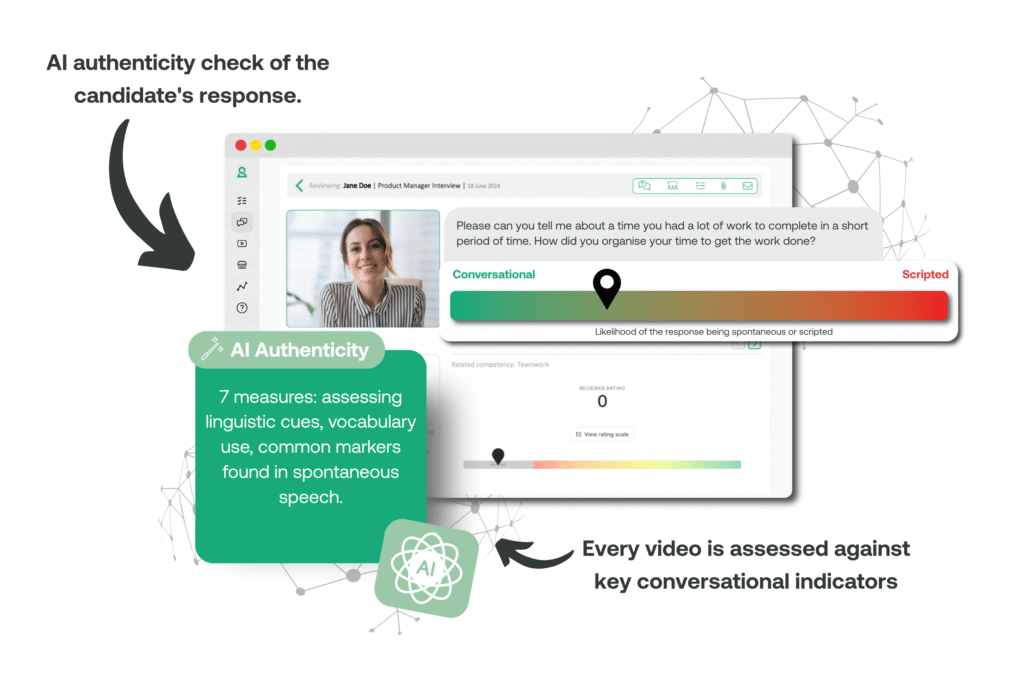

Launched almost a year ago — and now in its third iteration — the Authenticity Indicator gives hiring teams a new way to assess how naturally a candidate is speaking. While others in the market are only just entering this space, we’ve been refining our model with large-scale data from real interviews, ensuring greater accuracy, insight, and consistency with every update.

Why authenticity matters

Video interviews are designed to replicate real-world conversations — spontaneous, reflective, and in the moment. But in an asynchronous format, candidates can plan, rehearse, and even script their responses in ways that wouldn’t be possible in a live setting.

While preparation is often a sign of commitment, overly scripted responses can mask communication style, agility of thought, and personality — the very things hiring teams are trying to uncover.

The Authenticity Indicator helps surface this distinction, giving reviewers richer context for interpreting what they’re seeing and hearing.

How it works

The Authenticity Indicator uses a blend of AI and proprietary algorithms to assess how a response is delivered — not just what’s being said. It analyses seven key measures, looking at linguistic cues, vocabulary use, and common markers found in spontaneous speech.

Rather than depending on unreliable visual signals such as eye movement, the model focuses on how candidates naturally form and adjust their language in real time, giving reviewers an extra layer of insight to support fair and confident decisions.
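To make the idea of "markers found in spontaneous speech" more concrete, here is a minimal Python sketch. It is purely illustrative and is not the Authenticity Indicator's model: the function name `speech_markers`, the filler list, and the three measures are assumptions chosen for the example, not the seven measures the product uses.

```python
import re

# Hypothetical filler list, for illustration only (not the product's measures).
FILLERS = {"um", "uh", "er", "erm", "hmm"}


def speech_markers(transcript: str) -> dict:
    """Compute three toy delivery measures from a response transcript."""
    # Lowercase the text and keep only word-like tokens.
    words = re.findall(r"[a-z']+", transcript.lower())
    if not words:
        return {"filler_rate": 0.0, "repeat_rate": 0.0, "type_token_ratio": 0.0}

    # Share of words that are hesitation fillers.
    fillers = sum(1 for w in words if w in FILLERS)
    # Immediate repetitions ("I I think") often appear when someone
    # rephrases on the fly rather than reading from a script.
    repeats = sum(1 for a, b in zip(words, words[1:]) if a == b)

    return {
        "filler_rate": fillers / len(words),
        "repeat_rate": repeats / len(words),
        # Distinct words divided by total words: a rough vocabulary-variety cue.
        "type_token_ratio": len(set(words)) / len(words),
    }


if __name__ == "__main__":
    print(speech_markers("Um, I I think we, uh, fixed it"))
```

Simple counts like these would only ever be one input among many, and they say nothing on their own about whether a candidate is being genuine. That is why the output is best read as context for a human reviewer rather than as a verdict.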

Importantly, this tool doesn’t replace human judgement. It complements it — enhancing the quality of review rather than automating it.

What we’re learning

Since launching the Authenticity Indicator, we’ve analysed thousands of candidate responses across different sectors, job levels, and question types. One consistent and powerful insight has emerged: the way a question is asked plays a significant role in how authentic the response is.

Questions that invite personal reflection — such as overcoming challenges or identifying development areas — tend to elicit far more natural, conversational answers. On the other hand, questions that require factual recall or researched knowledge (like asking about industry trends or a company’s mission) are more likely to trigger a scripted response.

This insight isn’t just theoretical. It’s already helping our clients design smarter, more effective question sets — ones that bring out the human behind the application.

And thanks to the occupational psychology expertise within the Shortlister team, we’re not just analysing patterns — we’re actively applying best practice in assessment design to help organisations ask better questions, encourage authenticity, and create fairer evaluation experiences.

This is just the start. As we continue to learn from real-world data at scale, we’re uncovering more ways to help hiring teams not only detect authenticity but actively encourage it.

Want to explore what this could mean for your process? We’d love to show you.

Want to see it in action?

Get in touch to see how the Authenticity Indicator works within the Shortlister platform, and how it can support faster, fairer, and more confident decision-making.